Philosophy’s Jason Millar talks to the Faculty of Arts and Social Sciences about the ethical implications of technology.

The research of Jason Millar (Department of Philosophy) examines the social and ethical implications of technology in our rapidly transforming and increasingly gadget-centric world.

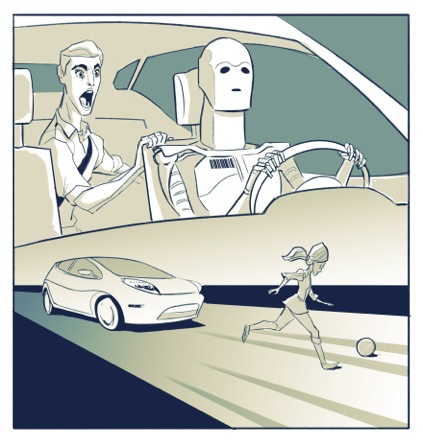

Millar is an engineer who returned to university to study philosophy, and he now finds himself asking tough ethical questions such as, “Should your driverless car kill you to save the life of a child?”

Millar recently sat down with FASS to discuss his work and the important role the arts and social sciences will play in assessing our reliance on artificial intelligence and semi-autonomous technology in our everyday lives.

Q – Why did you decide to come back to school and study philosophy? How does your background as an engineer influence your current research?

JM: In engineering we don’t get to take many breadth electives, but I did take a couple, one of which was a course in political philosophy. A few years after graduating, while I was working as an engineer, I found that I couldn’t shake that course. It was in my head, so to speak. John Rawls’s thought experiment involving the veil of ignorance and the original position impressed me with the practical approach it offered to issues of social justice. I don’t think it’s much of a stretch to see why this would appeal to an engineer. I saw Rawls’s argument as a means of “designing” society, a sort of social blueprint, and a lot of philosophy can certainly function in that way. You make an argument and, if it sticks, the world changes. It’s a slower change, and it isn’t as easy as designing a widget, but it’s a change nonetheless. In that sense I’ve always thought that philosophy and engineering have a lot in common.

Being an engineer has had a huge impact on my research choices. Having designed different kinds of technology, when I read philosophy I always ask whether and how it can inform the engineering profession. How could a particular theory in ethics, for example, define appropriate goals for engineering practice? Does a particular argument problematize the kinds of activities that engineers take for granted? How, exactly, does technology come into the world and could we improve that process? What would it mean to improve that process? To what extent must we consider the user in our design activities? These kinds of questions strike me as a natural fit between philosophy and engineering.

Q – Why is it important that we attain an understanding of the potential implications of these technologies?

JM: I’m currently studying a set of design problems that seem unique to, or at least more prominent in relation to, sophisticated automation technologies. As robotics and other semi-autonomous technologies advance in their sophistication, more and more decision-making algorithms are embedded in them. It turns out that in some cases the kinds of automated decisions that technology can make have significant moral implications for users.

Here’s an example that illustrates the point. I call it the Tunnel Problem: “You are travelling along a single-lane mountain road in an autonomous car that is fast approaching a narrow tunnel. Just before entering the tunnel a child errantly runs into the road and trips in the centre of the lane, effectively blocking the entrance to the tunnel. The car is unable to brake in time to avoid a crash. It has but two options: hit and kill the child, or swerve into the wall on either side of the tunnel, thus killing you. How should the car be designed to deal with this kind of scenario?”

From a technical perspective an engineer might think the solution is straightforward. She might say something like, “We can just poll individuals to see what they would want the car to do and then hard-code the majority’s answer into the vehicle.” But that would be a mistake. The tunnel problem isn’t a technical problem; it’s a deeply personal ethical problem. And if you ask people the right questions, you can see the difference in action. The Open Roboethics Initiative, a group I’m involved with, polled people to see how they would want the car to respond, and a surprising number of people, close to 40%, chose to hit the wall. We also asked respondents who they thought should make the decision, and the results were interesting. Only 12% of respondents thought that manufacturers should be making the decision, while 44% thought the passenger should have the final say in the outcome.

I interpret these results as an indication that engineers need to think carefully about the ethics underlying certain automation design features. Even if the majority of people would want the car to save them and sacrifice the child, hard-coding that decision into the car would confound the moral preferences of a significant number of users!

When I look around I find that this kind of problem comes up in all sorts of automation technologies, including medical implants and robotics, social robotics, Facebook and other social media, and the list keeps getting longer. A growing number of technologies have the capacity to make sophisticated, often deeply moral, decisions on behalf of the user in cases where, from an ethical perspective, the decision should be left to the user. By taking the decision away from the user, engineers and designers undermine users’ moral preferences.

Part of my work involves designing ethical evaluation frameworks that engineers and designers can apply in the design process in order to avoid falling into the hard-coding trap. In the end, I believe that we can design automation technologies that account for users’ moral preferences, that are trustworthy, and trusted, so long as we take these kinds of ethical considerations into account during the design phase. Given the number of automation technologies in the design pipeline, we need to get a handle on the ethics of automation sooner rather than later.

Q – What do you believe are some specific areas of ethical concern that we presently face, or are likely to confront in the foreseeable future?

JM: In the Robot Ethics course I teach here at Carleton, I underscore a number of areas of growing ethical concern. Military drones are raising important questions. To what extent should we automate them? Should military robots be able to target and fire without human oversight?

Medical robotics is also raising interesting ethical issues. Who gets access to the vast amounts of data that a carebot working in a patient’s home generates in the course of caring for that patient? Is it ever ethical to design a carebot to deceive a patient? Is it ethically acceptable to replace human caregivers with robots?

Social robotics, represented by robots like Jibo and Nao, is another emerging area. Given that those robots are designed to be embedded in a user’s social sphere, elicit trust and engage users in social relationships (e.g. friendships), to what extent can they be designed to function as an agent of the corporation selling them? What kinds of informed consent requirements are appropriate for those robots? Does the age of the user change these considerations?

Autonomous cars are raising a number of issues. Who should decide how cars react in difficult moral situations? Can an autonomous car be designed to intentionally injure a passenger in order to “distribute” the overall harm in an accident more evenly? Should owners be able to pay to have their cars get them around faster than other people?

The truth is that the more automation technologies we imagine, the more ethical issues are raised. It’s a really interesting time to be thinking about the philosophy and ethics of technology.

Q – What do you believe is our ‘best practice’ as we prepare to deal with these newfound realities?

JM: I recently participated in a workshop at a large human-robot interaction conference. What struck me was the number of engineers and designers who recognize that our traditional way of conceiving engineering ethics, as professional ethics, needs to be broadened to deal with the kinds of design issues that seem unique to automation technologies. The workshop participants agreed that the only way to adequately address these issues is an interdisciplinary approach that puts traditional technical disciplines to work alongside philosophers and ethicists, policymakers, lawyers, psychologists, sociologists and other experts in the humanities and social sciences.

From my own perspective, I think what needs to happen is a broadening of what we consider within the domain of “engineering ethics” to include a more philosophically informed analysis of technology. When I look at the recent focus on privacy spurred by the Internet, and now the growing focus on robot ethics, I think the engineering profession is experiencing a shift in the way that engineering ethics is conceived. This happened in medicine. In the mid- to late twentieth century, the medical profession broadened out from professional ethics, reconceiving medical ethics as requiring a philosophically informed bioethics. Coincidentally, it was the introduction of new technologies (for example, artificial insemination, sophisticated life support, genetics and so on) that played a big role in ushering in that shift. Automation technologies are doing the same to engineering. The kinds of ethical issues I study, like the tunnel problem, require a fundamental shift in how we (engineers) conceptualize the ethical dimensions of technology and our design work. We can’t tackle these issues in a vacuum; we need to enlist other experts to get the work done.